Neural Network Knowledge Distillation

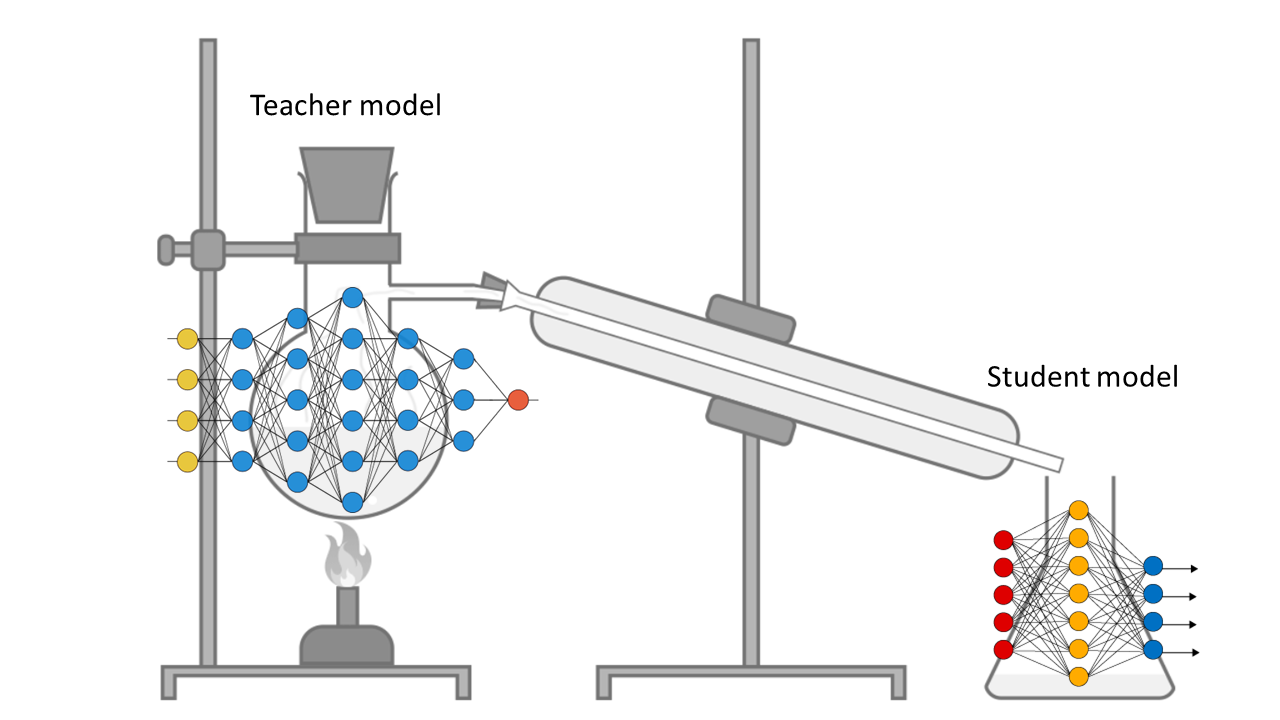

A TensorFlow demonstration of the knowledge distillation framework, showing how soft labels act as regularizers and how a neural network’s knowledge can be transferred to a simpler model.
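To make the idea of soft labels concrete, here is a minimal sketch in plain Python (not the TensorFlow code of this demonstration). It assumes the common formulation in which the teacher's logits are softened with a temperature, and the student is trained on a weighted sum of a soft-label term and a hard-label term. The function names, the `temperature` and `alpha` defaults, and the example logits are all illustrative assumptions.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Softmax over logits / temperature; higher temperature gives a flatter distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(target_probs, predicted_probs, eps=1e-12):
    """Cross-entropy between a target distribution and a predicted distribution."""
    return -sum(t * math.log(p + eps) for t, p in zip(target_probs, predicted_probs))

def distillation_loss(teacher_logits, student_logits, hard_label,
                      temperature=4.0, alpha=0.5):
    """Weighted sum of a soft-label term and a hard-label term (assumed weights)."""
    # Soft labels: teacher and student distributions at the same temperature.
    soft_targets = softmax_with_temperature(teacher_logits, temperature)
    student_soft = softmax_with_temperature(student_logits, temperature)
    # Hard labels: ordinary softmax (temperature 1) against a one-hot target.
    student_hard = softmax_with_temperature(student_logits, 1.0)
    one_hot = [1.0 if i == hard_label else 0.0 for i in range(len(student_logits))]
    # The temperature**2 factor keeps the soft term's gradient scale comparable.
    soft_term = cross_entropy(soft_targets, student_soft) * temperature ** 2
    hard_term = cross_entropy(one_hot, student_hard)
    return alpha * soft_term + (1 - alpha) * hard_term

teacher = [8.0, 2.0, 0.5]  # hypothetical teacher logits for a 3-class problem
print(softmax_with_temperature(teacher, 1.0))  # nearly one-hot
print(softmax_with_temperature(teacher, 4.0))  # softer: more mass on the other classes
print(distillation_loss(teacher, [5.0, 1.0, 0.0], hard_label=0))
```

At temperature 1 the teacher's output is close to a one-hot vector, while at a higher temperature the smaller probabilities become visible. Those relative probabilities are the extra signal the student learns from, and they are what gives soft labels their regularizing effect.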